LinkedIn's AI Data Use Sparks Privacy Concerns Among Users

LinkedIn's new AI training policy raises privacy issues. Users can opt out, but past data use is irreversible. The move contrasts with parent company Microsoft's more transparent approach.

LinkedIn, the professional networking platform owned by Microsoft, introduced a new data privacy setting last week that has raised concerns among its more than 900 million members worldwide. The setting allows the company to use user-generated content to train its artificial intelligence systems.

The new policy grants LinkedIn permission to use various types of user content, including posts, articles, and videos, for AI training. The setting is enabled by default, so users must actively opt out if they do not want their data used this way.

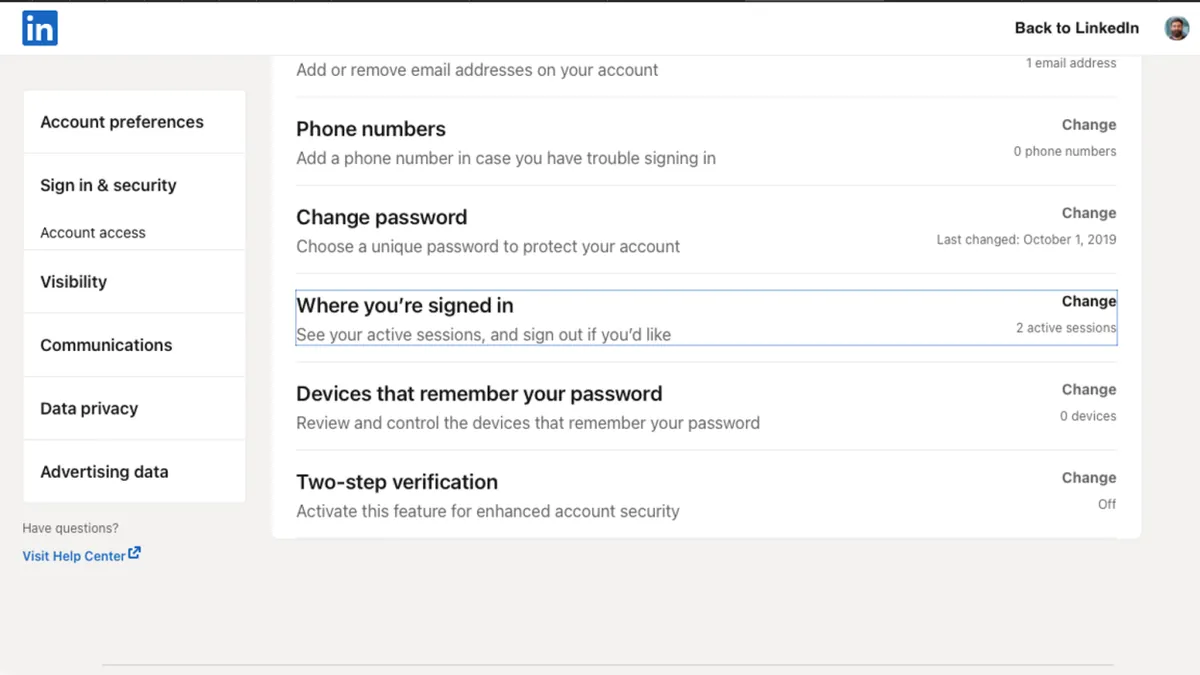

To opt out of this data collection, users need to follow these steps:

1. Log into their LinkedIn account

2. Click on their profile picture

3. Open settings

4. Select "Data privacy"

5. Turn off the option under "Data for generative AI improvement"

Opting out is not retroactive. LinkedIn has already begun training its AI models on user content, and there is currently no way to undo that earlier use.

LinkedIn spokesman Greg Snapper defended the decision, saying the data is used to "help people all over the world create economic opportunity" by improving tools for job searching and skill development. This goal is reflected in features such as LinkedIn Learning, which offers more than 16,000 online courses, and the "Open to Work" feature launched in 2020.

Privacy advocates, however, have raised concerns about the company's approach. F. Mario Trujillo, a staff attorney at the Electronic Frontier Foundation, criticized the opt-out design: "Hard-to-find opt-out tools are almost never an effective way to allow users to exercise their privacy rights."

LinkedIn's move is not unique in the tech industry. Other major players like Meta (formerly Facebook) and OpenAI have also been using public user data to train their AI models. Meta's director of privacy policy, Melinda Claybaugh, recently confirmed that the company had been scraping public photos and text from Facebook and Instagram for AI training purposes for years.

The contrast between LinkedIn's approach and that of its parent company, Microsoft, is noteworthy. In August, Microsoft announced plans to train its AI Copilot tool on user interactions and data from Bing and Microsoft Start. Unlike LinkedIn, however, Microsoft committed to notifying users of the opt-out option in October and promised not to begin training its AI models until 15 days after the option became available.

When questioned about the discrepancy between LinkedIn's and Microsoft's approaches, Snapper avoided directly addressing the issue, instead focusing on future improvements: "As a company, we're really just focused on 'How can we do this better next time?'"

The situation highlights the ongoing challenge of balancing technological advancement with user privacy and consent. As AI plays an increasingly significant role in shaping online experiences, companies like LinkedIn, which says its Recruiter platform is used by more than 75% of Fortune 100 companies, must weigh innovation against respect for user rights.

"If we get this right, we can help a lot of people at scale."

LinkedIn may intend to improve its services, but the lack of clear communication, combined with the fact that opting out cannot undo past training, has left many users uneasy about their privacy and about the value their contributions add to the platform's AI development.

As the debate over data privacy and AI training continues, it remains to be seen how LinkedIn and other tech giants will respond to these concerns, and whether they will adjust their policies to better match user expectations and ethical standards as artificial intelligence rapidly evolves.