AI Assistants in the Workplace: The Efficiency vs. Discretion Dilemma

AI tools are revolutionizing workplace tasks, but their lack of discretion raises concerns. From the unintended sharing of sensitive information to broader privacy risks, the integration of AI into corporate settings presents new challenges.

The integration of artificial intelligence into corporate environments is reshaping traditional workplace dynamics, particularly in administrative work such as taking and summarizing meeting notes. AI assistants offer real efficiency gains, but they lack a human's sense of discretion, and that gap can lead to unintended consequences.

A recent incident highlighted this issue when Alex Bilzerian, a researcher and engineer, reported receiving an automated email from Otter.ai containing a transcript of a Zoom meeting with venture capital investors. The transcript included sensitive discussions that occurred after Bilzerian had logged off, ultimately influencing his decision to terminate the deal.

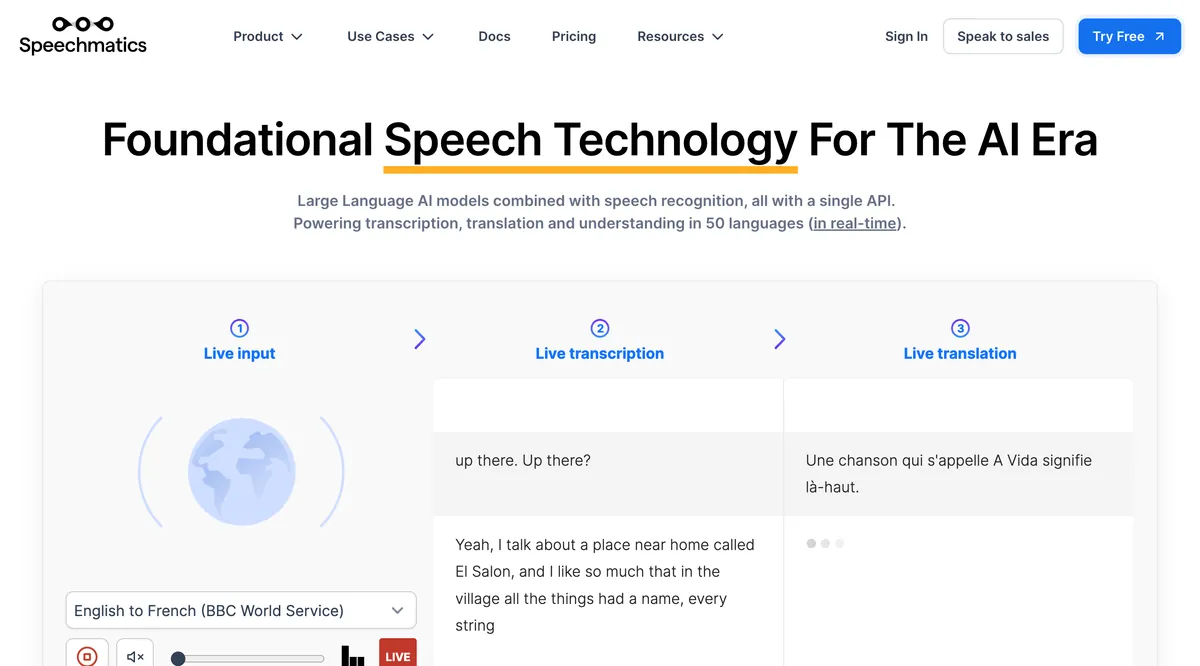

This event underscores how quickly AI features are spreading through work products. Companies such as Salesforce, Microsoft, Google, and Slack have been building AI capabilities into their offerings. Salesforce recently unveiled Agentforce, an AI-powered virtual agent for sales and customer service. Microsoft's Copilot and Google's Gemini are being extended across a growing range of work applications. Even Slack has introduced AI features for summarizing conversations and searching by topic.

However, the implementation of these AI tools raises concerns about privacy and discretion in the workplace. Naomi Brockwell, a privacy advocate, warns that the combination of constant recording and AI-powered transcription could erode workplace privacy and expose individuals to potential legal risks.

"I once received an Otter transcript after a Zoom meeting that contained moments where the other participant muted herself to talk about me. She had no idea, and I was too uncomfortable to tell her."

This anecdote illustrates the potential for AI tools to capture unintended moments, creating awkward situations and breaching trust among colleagues.

Who is responsible for managing these risks is a point of contention. Companies like Otter.ai and Zoom emphasize that users control their own settings, but experts argue that the onus should not fall solely on users. Hatim Rahman, an associate professor at Northwestern University's Kellogg School of Management, suggests that companies should build more friction into their products to prevent the unintended sharing of sensitive information.

As AI continues to reshape the workplace, it's crucial to consider the ethical implications and potential consequences of these technologies. The concept of "AI ethics" gained significant attention in the 2010s as AI became more prevalent in society. This growing field addresses concerns about privacy, bias, and the responsible use of AI in various contexts, including the workplace.

The integration of AI into corporate settings also intersects with data protection law. The General Data Protection Regulation (GDPR), which took effect in the EU in 2018, and the California Consumer Privacy Act (CCPA), which took effect in 2020, provide frameworks for protecting personal data in the age of AI and big data.

As we navigate this new landscape, it's essential to strike a balance between leveraging AI's capabilities and preserving the human elements of discretion and trust in the workplace. The challenge lies in harnessing the power of AI assistants while ensuring they complement, rather than compromise, the nuanced interactions that define professional relationships.