California Enacts AI Laws to Safeguard Elections and Protect Performers

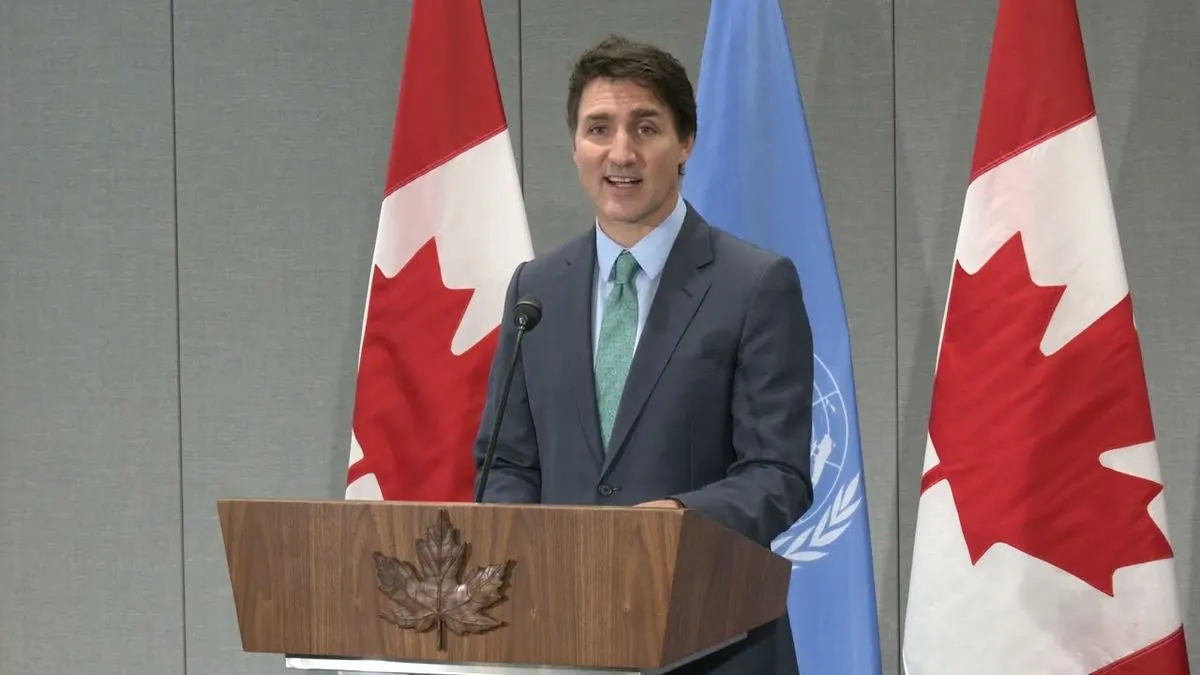

California Governor Gavin Newsom signs multiple AI-related bills, addressing deepfakes in elections and protecting Hollywood performers' likenesses. The measures aim to balance public welfare with the rapidly evolving AI industry.

Gavin Newsom, California's governor since 2019, took a significant step in regulating artificial intelligence by signing several bills into law on September 17, 2024. The measures address growing concerns about deepfakes in elections and protect the rights of performers in the entertainment industry.

California, the most populous U.S. state and home to Silicon Valley, has been at the forefront of technological innovation. With 32 of the world's top 50 AI companies based in the state, California's government faces the challenge of balancing public welfare with the ambitions of a rapidly evolving industry that employs over 1.8 million people.

The new legislation focuses on two main areas: election integrity and performer rights. One of the key bills, A.B. 2655, requires large online platforms to remove or label deceptive, digitally altered, or AI-generated content related to elections during specific periods before and after they are held. This measure addresses the increasing worry about deepfakes circulating during the 2024 campaign, a concern that has been growing since the 2016 U.S. presidential election.

Another bill, A.B. 2839, expands the timeframe during which knowingly sharing election material containing deceptive AI-generated or manipulated content is prohibited. Additionally, A.B. 2355 mandates that election advertisements disclose the use of AI-generated or substantially altered content.

Newsom emphasized the importance of these measures, stating, "Safeguarding the integrity of elections is essential to democracy, and it's critical that we ensure AI is not deployed to undermine the public's trust through disinformation — especially in today's fraught political climate."

The governor's actions follow a July 2024 incident in which Elon Musk, owner of X (formerly Twitter), reposted an altered campaign advertisement featuring Kamala Harris. The incident prompted Newsom to call for legislation making such manipulations illegal.

In the entertainment sector, two new laws address the concerns of actors and performers. A.B. 2602 requires contracts to specify how AI-generated replicas of a performer's voice or likeness will be used, while A.B. 1836 prohibits commercial use of digital replicas of deceased performers without their estates' consent.

These laws build on protections that SAG-AFTRA, the actors' union formed in 2012, secured during its historic 2023 strike. The union's contract now includes safeguards against AI, requiring "informed consent" and "fair compensation" for the creation of digital replicas.

"No one should live in fear of becoming someone else's unpaid digital puppet," said Duncan Crabtree-Ireland, SAG-AFTRA's national executive director and chief negotiator.

Hollywood has told stories about AI for decades, including 2001's "A.I. Artificial Intelligence," but the use of AI tools in production is a more recent subject of debate. The industry has seen both consensual applications, such as the replication of James Earl Jones's Darth Vader voice, and unauthorized uses that have raised concerns among celebrities.

While these new laws mark a significant step in AI regulation, the fate of S.B. 1047 remains uncertain. This bill, which aims to make AI companies liable if their technology is used for harm, faces strong opposition from the tech industry. Venture capitalists and start-up founders argue it could stifle innovation, while its author, Sen. Scott Wiener, contends it merely formalizes existing commitments made by AI companies.

As California continues to navigate the complex landscape of AI regulation, these new laws represent a crucial effort to protect democratic processes and individual rights in the digital age. The state's actions may set a precedent for other regions grappling with similar challenges in the rapidly evolving field of artificial intelligence.