OpenAI Expands Voice AI Access, Raising Opportunities and Concerns

OpenAI broadens access to its advanced voice AI technology for developers, potentially revolutionizing human-AI interactions. The move promises new applications but also raises ethical concerns about AI voice misuse.

OpenAI, the company behind ChatGPT, has announced a significant expansion of access to its voice AI technology. The move allows any app developer to integrate humanlike voice interactions into their products, potentially revolutionizing the way people interact with artificial intelligence.

The company's "advanced voice mode," which has been available to ChatGPT subscribers since July 2024, offers six AI voices capable of detecting and responding to various human vocal tones. This technology, now accessible to thousands of companies and new developers, represents a major step in AI-human interaction.

Kevin Weil, OpenAI's chief product officer, stated, "We want to make it possible to interact with AI in all of the ways you interact with a human being." This ambitious goal reflects OpenAI's mission to ensure artificial general intelligence benefits all of humanity.

The expansion of voice AI access could significantly boost OpenAI's revenue through usage fees. This is crucial for the company, which is seeking billions in new funding and considering restructuring its business model. Founded in 2015 as a non-profit AI research company, OpenAI has since shifted to a capped-profit structure and received substantial funding from Microsoft.

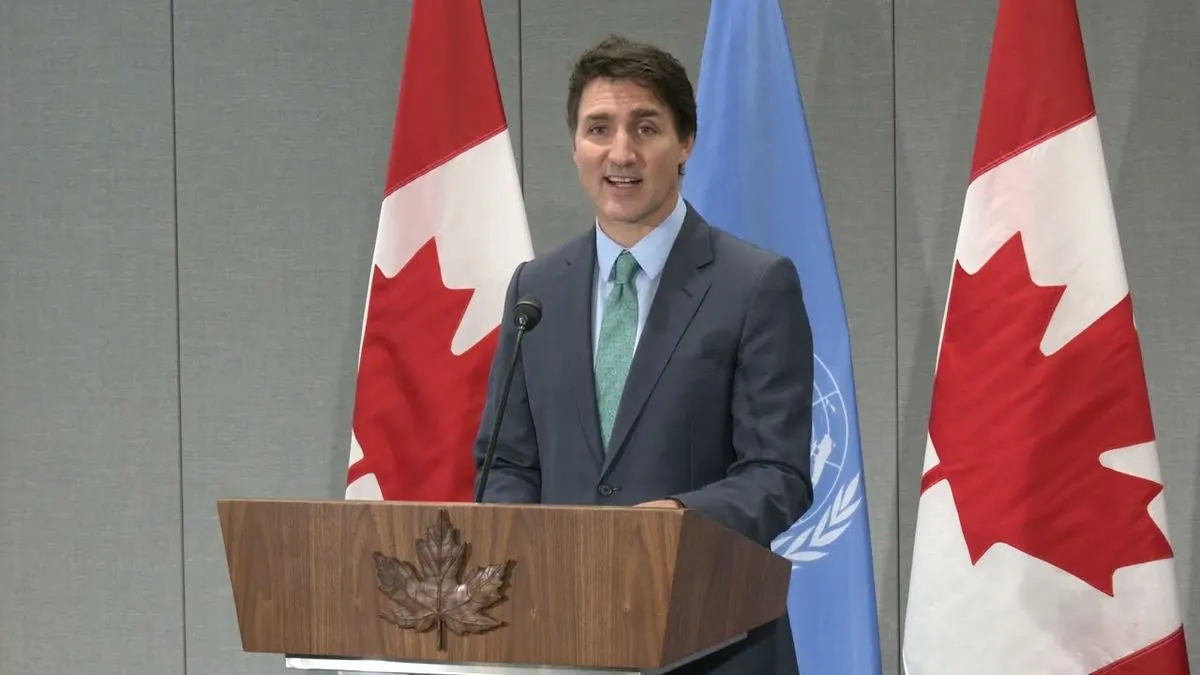

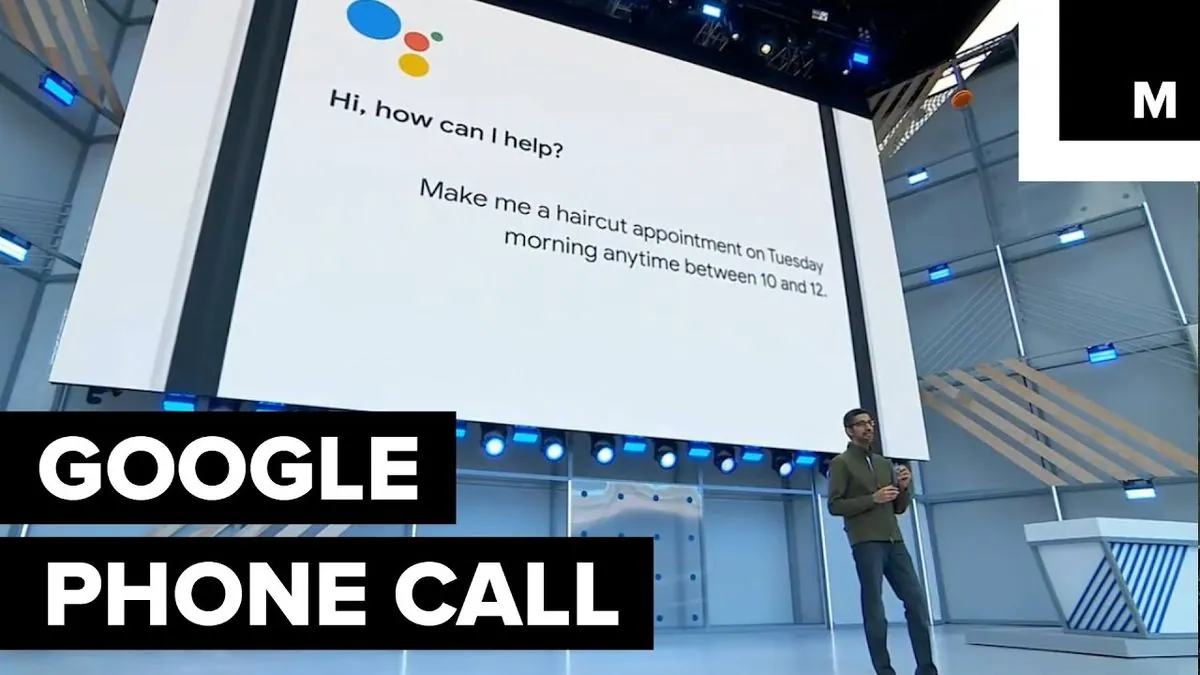

OpenAI's move follows earlier voice AI efforts by other tech giants. In 2018, Google introduced Duplex, an AI voice bot capable of making restaurant reservations. However, Duplex faced backlash for not initially disclosing that it was an AI, which led Google to change its programming.

The advancement of voice AI technology brings both opportunities and challenges. While it could enhance customer service and accessibility, there are concerns about potential misuse. In January 2024, AI-generated robocalls imitating President Biden's voice in New Hampshire led to a $6 million fine from the Federal Communications Commission against the political consultant behind them.

To address these concerns, OpenAI has implemented strict rules for developers using its technology. The company prohibits using it for spam, misleading content, or harmful applications. Developers must also clearly disclose when users are interacting with AI, unless it's obvious from the context.

OpenAI's commitment to responsible AI development is evident in its various initiatives. The company has been involved in AI safety research and policy discussions, and it has developed tools for detecting AI-generated text. Its charter outlines principles for beneficial AI development, and it has a dedicated team for AI alignment research.

As of October 2024, 3 million developers across dozens of countries are experimenting with OpenAI's technology. The expansion of voice AI access could spawn a new wave of AI-driven startups, similar to how the internet and smartphones enabled the growth of companies like Google, Uber, and DoorDash.

While the potential for innovation is significant, some AI experts caution about the risks associated with convincing AI voices. The fake Biden robocalls of January 2024 serve as a stark reminder of the technology's potential for misuse.

OpenAI's GPT models, which power these voice interactions, are based on the transformer architecture. An earlier model in the family, GPT-3, has 175 billion parameters and demonstrated the ability to handle complex topics and generate human-like responses.

As AI technology continues to advance, the balance between innovation and responsible use remains crucial. OpenAI's expansion of voice AI access marks a significant milestone in human-AI interaction, but it also underscores the need for ongoing ethical considerations and safeguards in the rapidly evolving field of artificial intelligence.