Senator Cardin Targeted by Deepfake Impersonator in Video Call

Senator Ben Cardin encountered a suspected deepfake impersonator during a video call. The incident raises concerns about the use of AI technology to target lawmakers and spread misinformation.

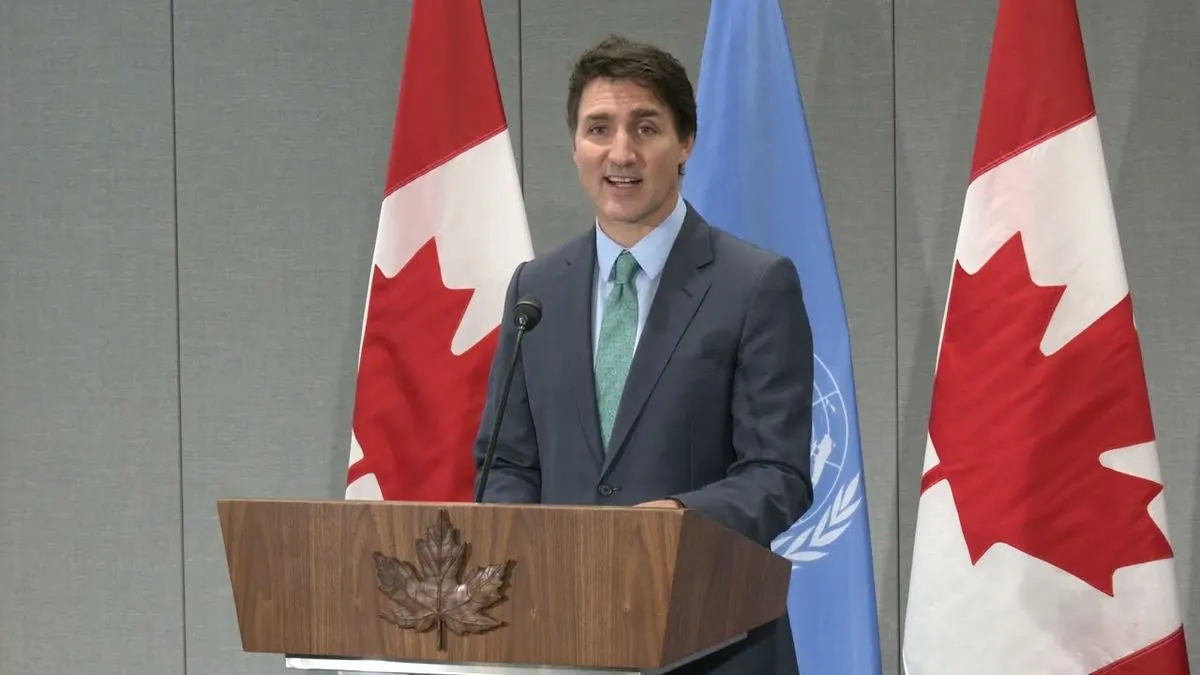

Senator Ben Cardin, chair of the Senate Foreign Relations Committee, was targeted last week by a suspected deepfake impersonator on a video call. The incident has raised alarms about the misuse of artificial intelligence to deceive lawmakers and spread misinformation.

According to reports, Cardin received an email from someone claiming to be Dmytro Kuleba, Ukraine's former foreign minister, requesting a Zoom conversation with the senator. During the video call, the impersonator's voice and appearance closely resembled Kuleba's, showing how convincing deepfake technology has become.

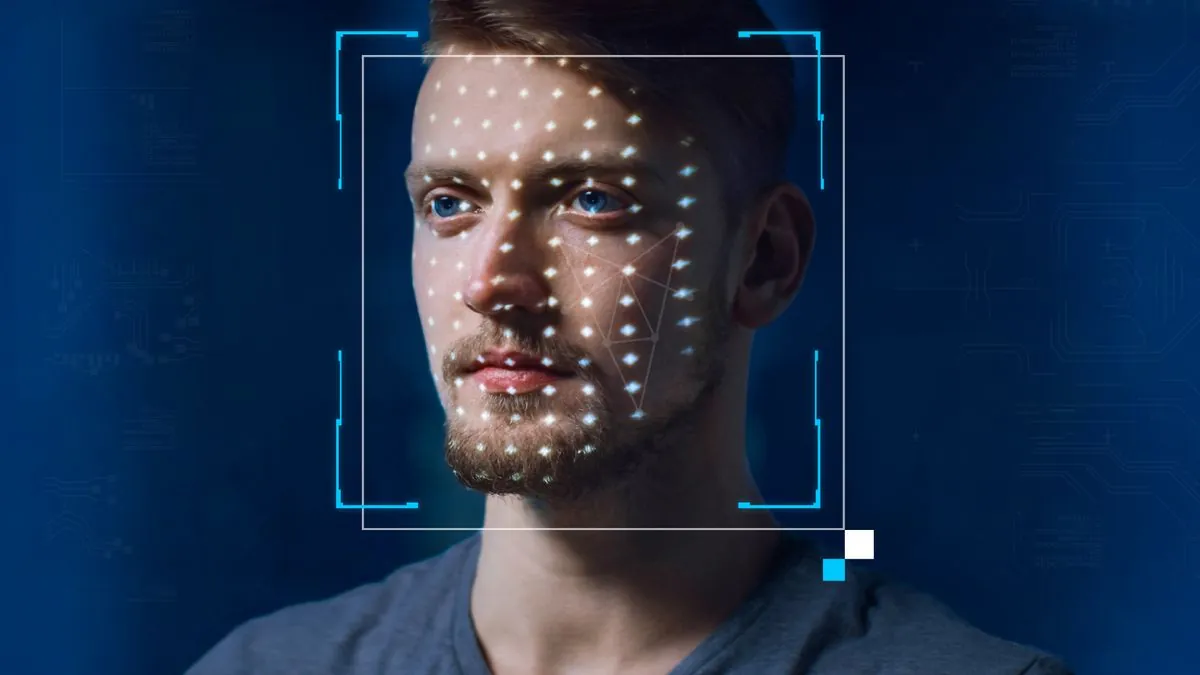

Deepfake technology, which emerged in 2017, uses artificial intelligence to create or manipulate audio and video content. This incident highlights the growing concern about its potential impact on political processes and international relations.

Cardin became suspicious when the impersonator asked questions that seemed out of character, particularly regarding the upcoming election and Ukraine's request for long-range missiles. Recognizing the deception, the senator promptly ended the call and alerted authorities.

In a statement, Cardin said, "After immediately becoming clear that the individual I was engaging with was not who they claimed to be, I ended the call and my office took swift action, alerting the relevant authorities."

This incident is not isolated. Earlier this year, former UK Foreign Secretary David Cameron fell victim to a similar scheme, participating in a fake video call with someone impersonating Ukraine's former president, Petro Poroshenko. Several European mayors have also been targeted by individuals posing as Kyiv's mayor.

The U.S. government has invested in deepfake detection technology to combat such threats. Additionally, social media platforms have implemented policies to identify and remove deepfake content. However, the technology continues to evolve, posing ongoing challenges for cybersecurity experts and policymakers.

Dmytro Kuleba, the real Ukrainian official, responded to the incident, stating he was "99 percent sure" the deepfake was created by "Russian pranksters." He advised caution, saying, "The best thing you can do to avoid getting trapped in the deepfake is to always verify the source and not tell the truth to strangers."

The incident has prompted increased vigilance among lawmakers and their staff. Committee members have been instructed to exercise extra caution with external communications, particularly when dealing with individuals claiming to be high-profile figures.

As deepfake technology advances, it presents both opportunities and risks. While it has potential applications in entertainment and education, its misuse can have serious consequences. Some countries have introduced legislation to combat deepfake misinformation, and researchers are developing "digital watermarks" to authenticate genuine videos.

The incident serves as a stark reminder that awareness and technological safeguards must keep pace with the challenges deepfakes pose in political and diplomatic spheres.

As Senator Cardin prepares to retire at the end of 2024, this experience underscores the evolving nature of cybersecurity threats facing public officials. It also highlights the importance of maintaining vigilance in an era where technology can be used to create increasingly convincing impersonations.