The Legal Landscape of Open vs. Closed Generative AI Models

Explore the complex legal implications of open and closed generative AI models, including licensing, copyright, privacy, and security concerns. Legal experts weigh the trade-offs in this evolving technological landscape.

Generative artificial intelligence (genAI) models now span a spectrum from open to closed systems. Where a model sits on that spectrum presents both opportunities and challenges for legal professionals advising on AI implementation.

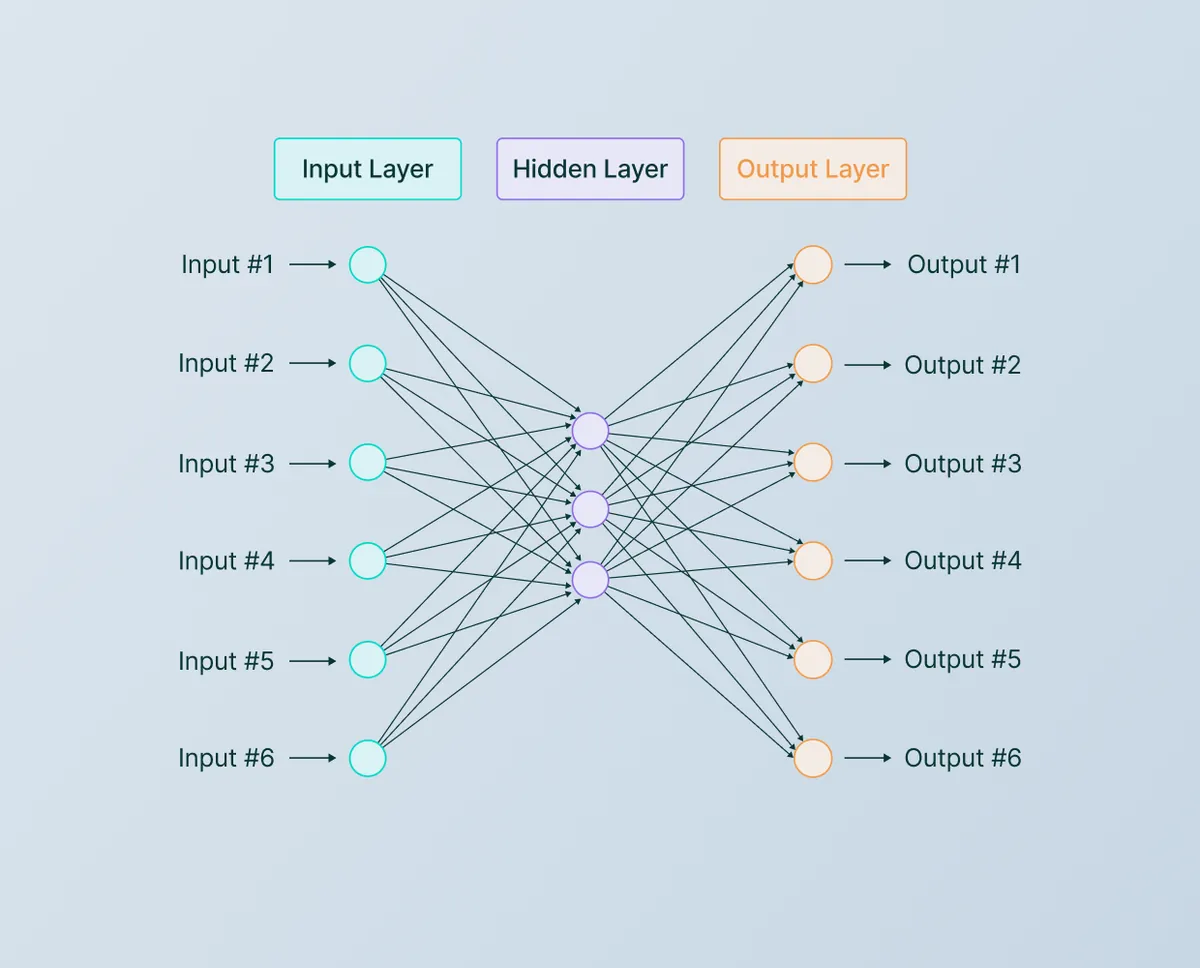

The concept of "open" AI models remains a subject of debate. In April 2024, the Open Source Initiative proposed the Open Source AI Definition (OSAID), which outlines specific freedoms and access requirements for AI systems to be considered truly open. However, most practitioners use a looser definition, typically referring to models with published weights and code as "open," and those with unpublished weights as "closed" or "proprietary."

OpenAI, founded in 2015 by Elon Musk, Sam Altman, and others, has been at the forefront of this debate with its release of both open and closed models. The company's approach highlights the evolving nature of AI development and the challenges in balancing innovation with control.

Closed genAI models, such as GPT-4 from OpenAI and Gemini Pro from Google, are those whose developers keep the weights, architecture, and training data proprietary. These models are typically accessed through APIs, which lets the provider optimize performance and protect its intellectual property. In contrast, open models like OpenAI's Whisper and Google's Gemma-2-27B make their pre-trained weights and accompanying code publicly available, fostering community-driven innovation.

Proponents of open models argue for transparency and democratization of AI technology. This approach aligns with the history of open-source software: the practice of sharing source code dates back to the 1950s and gained significant traction with the release of the GNU General Public License in 1989. Advocates of closed models, by contrast, emphasize the importance of controlled use cases and robust safety measures.

Legal counsel must carefully consider several key implications when adopting open genAI models:

Licensing restrictions: Open models often come with complex licensing terms that may limit commercial use or require attribution. A model's code, weights, and training data may each be released under different terms, and inconsistencies among them can create legal conflicts.

Copyright risks: The use of open datasets, such as Books3 containing nearly 200,000 books, introduces significant copyright concerns. Ongoing legal battles highlight the complexities of fair use in AI training.

Privacy concerns: Open datasets frequently contain personal data scraped from the internet, raising privacy issues and compliance challenges under regulations like the GDPR.

Security vulnerabilities: The public accessibility of open models' source code and weights may expose them to manipulation by malicious actors.

Bias and harm mitigation: While open models offer flexibility, they may lack the continuous updates and safeguards implemented in closed systems to prevent harmful outputs.

"Legal counsel should carefully track which genAI models are being used and have a thorough understanding of the applicable use cases, the provenance of such models and the applicable licensing terms."

As the field of generative AI continues to evolve, legal professionals must remain vigilant in assessing the trade-offs between open and closed models. Establishing clear governance frameworks, conducting ongoing education, and maintaining rigorous monitoring and security measures are essential steps in mitigating the risks associated with both approaches.
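One way to make this kind of tracking concrete is a simple model register that records, for each model in use, its use case, provenance, and the license attached to each component. The following Python sketch is illustrative only: the `ModelRecord` class, its field names, the three component labels, and the placeholder license names are all assumptions made for this example, not an established standard or a substitute for legal review.

```python
from dataclasses import dataclass, field

# Hypothetical component labels; a real register may need more or fewer.
COMPONENTS = ("code", "weights", "training_data")


@dataclass
class ModelRecord:
    """One entry in an illustrative genAI model register."""
    name: str
    use_case: str
    weights_published: bool  # the looser, practitioner sense of "open"
    provenance: str          # where the model was obtained from
    component_licenses: dict[str, str] = field(default_factory=dict)

    def review_flags(self) -> list[str]:
        """Return items that counsel may want to review by hand."""
        flags = []
        if not self.provenance:
            flags.append("provenance not recorded")
        for part in COMPONENTS:
            if part not in self.component_licenses:
                flags.append(f"no license recorded for {part}")
        # Differing licenses are not necessarily a conflict, only a prompt
        # to compare the terms.
        if len(set(self.component_licenses.values())) > 1:
            flags.append("components carry different licenses")
        return flags


# Placeholder entry; the model and license names are invented.
record = ModelRecord(
    name="example-model",
    use_case="contract summarization",
    weights_published=True,
    provenance="",
    component_licenses={"code": "license-A", "weights": "license-B"},
)
for flag in record.review_flags():
    print(f"{record.name}: {flag}")
```

A register like this does not decide whether a license conflict exists. It only surfaces gaps and mismatches so that a lawyer can examine the underlying terms.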

The legal landscape surrounding genAI models is complex and rapidly changing. By staying informed about new developments and weighing the benefits and drawbacks of each model type, legal counsel can help organizations adopt AI while limiting their legal exposure.