California Passes Groundbreaking AI Safety Bill Amid Tech Industry Debate

California lawmakers approve pioneering AI safety legislation, requiring testing and disclosure for large-scale models. The bill faces opposition from tech giants but gains support from some industry players, as Gov. Newsom's decision looms.

California has taken a significant step towards regulating artificial intelligence (AI) with the passage of a landmark bill through the state Assembly. This legislation, aimed at establishing safety measures for large-scale AI systems, could set a precedent for future regulations across the United States.

The bill, which passed on August 28, 2024, targets AI models that cost more than $100 million to train. It requires developers to test their models and publicly disclose their safety protocols to prevent potential misuse, such as attacks on the state's electric grid or assistance in developing chemical weapons.

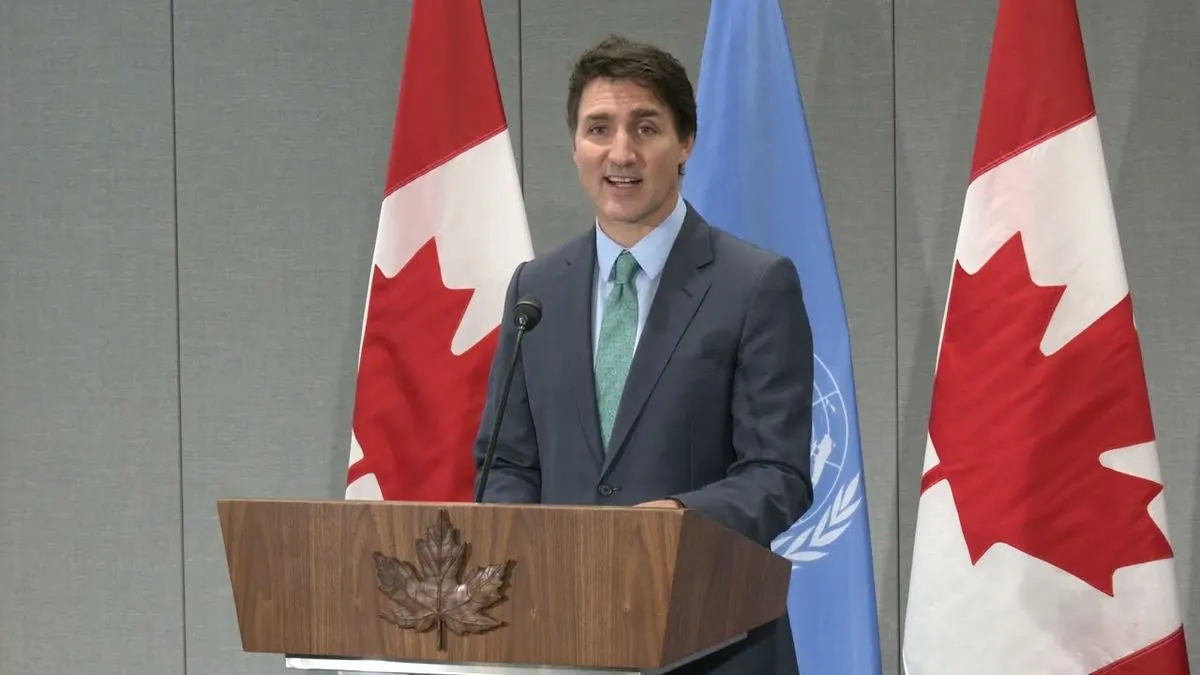

Scott Wiener, the Democratic state senator who authored the bill, emphasized its "light touch" approach, stating, "Innovation and safety can go hand in hand—and California is leading the way." The legislation has garnered support from Anthropic, an AI startup backed by Amazon and Google, and from Elon Musk, owner of the social media platform X.

However, the bill faces opposition from major tech companies, including OpenAI, Google, and Meta. These firms argue that safety regulations should be established at the federal level and that the California legislation unfairly targets developers rather than those who might exploit AI systems for harm.

The debate surrounding this bill reflects the broader challenges of regulating AI. Since John McCarthy coined the term "artificial intelligence" for the 1956 Dartmouth workshop, the field has advanced rapidly. ChatGPT, released in late 2022, went on to become the fastest-growing consumer application in history, a sign of how quickly AI development is accelerating.

One opponent of the bill put it bluntly: "This bill has more in common with Blade Runner or The Terminator than the real world. We shouldn't hamstring California's leading economic sector over a theoretical scenario."

Critics argue that the bill's focus on potential catastrophic risks is unrealistic. However, supporters contend that proactive measures are necessary given the unpredictable nature of AI advancements. The concept of "technological singularity," which suggests AI could surpass human intelligence, underscores the importance of establishing safety protocols early.

California, home to 35 of the world's top 50 AI companies, must balance the need for regulation against its position as a hub for AI innovation. By one projection, the AI market will reach $190.61 billion by 2025, underscoring the economic stakes of these regulatory decisions.

Governor Gavin Newsom now has until September 30, 2024, to decide whether to sign the bill into law, veto it, or allow it to become law without his signature. His decision will likely have far-reaching implications for the future of AI regulation in the United States and potentially globally.

As AI continues to permeate various aspects of daily life, from healthcare to autonomous vehicles, the need for thoughtful regulation becomes increasingly apparent. The California bill represents a crucial step in addressing the potential risks associated with powerful AI systems while fostering responsible innovation in this rapidly evolving field.