California Passes Landmark AI Regulation Bill, Sparking Industry Debate

California's State Assembly approves strict AI regulations, requiring companies to test for catastrophic risks. The bill, now headed to the governor, has ignited controversy in the tech industry and political sphere.

On August 21, 2024, the California State Assembly took a significant step towards regulating artificial intelligence by passing a bill that could establish the nation's most stringent AI regulations. This legislation, known as the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act or SB 1047, has reignited the ongoing debate about AI safety and innovation.

The bill, which passed by a vote of 41 to 9, requires companies developing large-scale AI models to test thoroughly for potential "catastrophic" risks before releasing their technology to the public. These risks include an AI model's ability to instruct users on conducting cyberattacks or creating biological weapons. Companies that fail to comply with the testing requirements could face legal action by California's attorney general if their technology is used to cause harm.

Scott Wiener, the Democratic state senator who authored the bill, has emphasized that it targets only companies working on large and costly AI models, in order to avoid burdening smaller startups competing with tech giants. The focus on large-scale development reflects how far AI capabilities have advanced since the Logic Theorist, often described as the first AI program, was created in 1955.

The legislation has exposed a deep divide within the AI community. On one side are researchers associated with the effective altruism movement, who advocate stricter limits on AI development to prevent potentially catastrophic outcomes. Supporting this view are AI pioneers Geoffrey Hinton and Yoshua Bengio, along with Elon Musk, who has long warned about the dangers of super-intelligent AI. This cautious approach echoes concerns that date back to the field's beginnings, when John McCarthy coined the term "artificial intelligence" for the 1956 Dartmouth workshop.

On the opposing side are AI researchers and industry leaders who argue that the technology is not yet advanced enough to pose such extreme risks. They contend that strict government regulations could hinder AI innovation and potentially allow other countries to surpass the United States in technological development. This debate reflects the ongoing challenge of balancing progress and safety, a tension that has shaped the field since at least the first AI winter of 1974 to 1980, when funding and interest declined.

The bill has faced significant opposition from the tech industry, with companies like Google and Meta expressing their concerns in formal letters. Industry representatives have been actively lobbying against the legislation, even launching a website to generate opposition letters to California policymakers. This resistance highlights the economic stakes involved: the global AI market was valued at $136.55 billion in 2022, and AI is projected to contribute $15.7 trillion to the global economy by 2030.

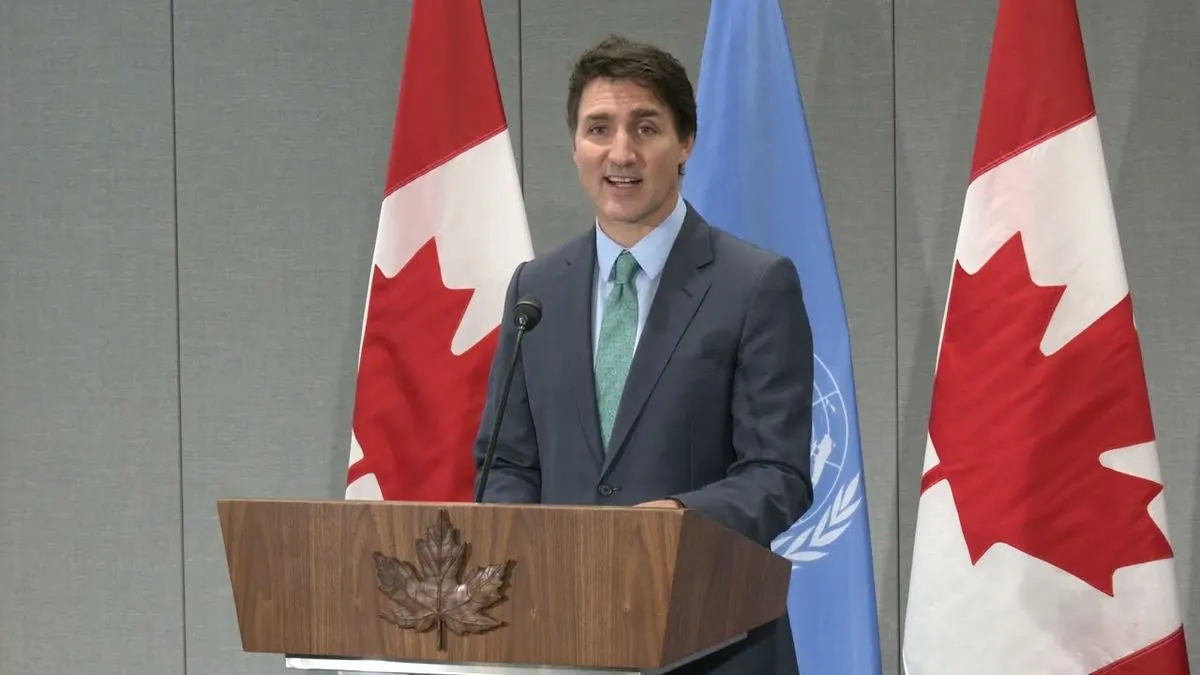

National politicians have also weighed in on this state-level legislation. Nancy Pelosi, a prominent Democrat from California, described the bill as "well-intentioned but ill-informed," joining other federal politicians in opposition. Such involvement of national figures in state legislation is unusual and underscores the far-reaching implications of AI regulation.

Critics argue that the bill takes the wrong approach by regulating the technology itself rather than specific uses of it. Andrew Ng, an AI startup CEO with experience at Google and Baidu, stated on social media, "This proposed law makes a fundamental mistake of regulating AI technology instead of AI applications." His objection raises the question of which approach to AI governance is most effective, a debate that has evolved significantly since ELIZA, the first AI chatbot, was created in 1966.

The passage of this bill positions California as a potential de facto tech regulator, similar to its role in privacy and online safety legislation. As federal lawmakers focus on the upcoming presidential election, the prospects for national AI legislation remain uncertain. This state-level initiative could set a precedent for how AI is regulated across the country.

"When it comes to AI, innovation and safety are not mutually exclusive."

As the bill moves to Governor Gavin Newsom's desk, the tech industry and AI community await his decision. The outcome could significantly shape the future of AI development and regulation in the United States, much as earlier milestones shaped public perception of the technology: IBM's Deep Blue defeating world chess champion Garry Kasparov in 1997, and DeepMind's AlphaGo defeating Go champion Lee Sedol in 2016.

This legislative development comes at a time when AI capabilities are rapidly advancing. In 2020, OpenAI's GPT-3 language model contained 175 billion parameters, an indication of how quickly model scale has grown. As the technology continues to evolve, the debate over its regulation is likely to intensify, continuing discussions about AI's potential benefits and risks that have persisted since Alan Turing proposed the Turing test in 1950.