Meta Overhauls Instagram's Teen Safety Measures Amid Growing Concerns

Meta introduces new "teen accounts" on Instagram, enhancing parental oversight and safety features. The move comes as the company faces criticism and legal challenges over youth mental health impacts.

Meta, the parent company of Instagram, has unveiled a comprehensive update to its youth safety strategy, introducing a new "teen accounts" program. This initiative, announced on September 17, 2024, aims to address growing concerns about the platform's impact on adolescent well-being.

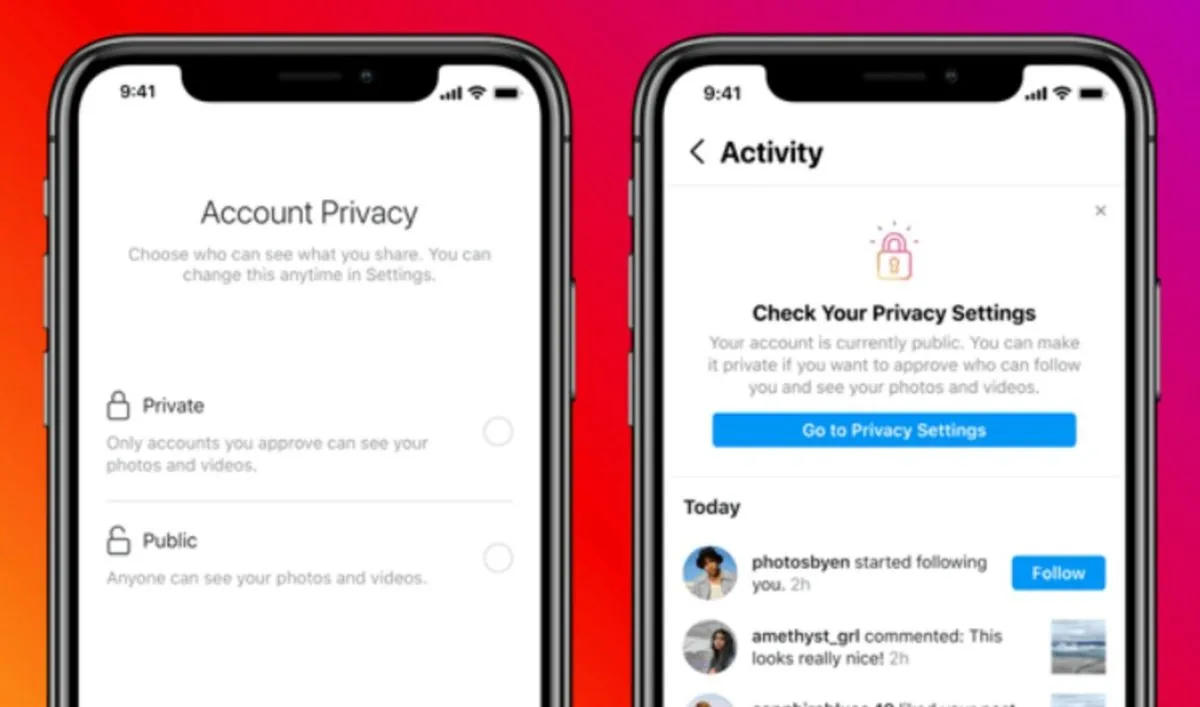

The revamped approach focuses on providing parents with enhanced oversight of their teenagers' online activities. Antigone Davis, Meta's global head of safety, stated, "We are changing the experience for millions of teens on our app." The new measures are designed to limit screen time, regulate content visibility, and restrict interactions with strangers.

Key features of the "teen accounts" program include:

- Forced private accounts for users under 16
- AI-powered age verification
- Improved parental controls
- Restricted messaging capabilities
- Content topic selection for teens

These changes come amid increasing scrutiny from lawmakers and child safety advocates. In July 2024, the U.S. Senate passed the Kids Online Safety Act, signaling a potential shift in the regulatory landscape. Meta's announcement comes one day before a crucial House committee markup of child privacy legislation, scheduled for September 18, 2024.

Josh Golin, executive director of Fairplay, criticized the timing, stating, "We hope lawmakers will not be fooled by this attempt to forestall legislation." This sentiment reflects the ongoing debate about the adequacy of self-regulation in the tech industry.

Instagram, launched in October 2010 and acquired by Facebook (now Meta) in 2012 for $1 billion, has grown to over 2 billion monthly active users as of 2023. The platform has faced persistent criticism regarding its impact on mental health, especially among younger users.

The new safety measures will be implemented within 60 days in the United States, United Kingdom, Canada, and Australia, with a global rollout planned for January 2025. They build on earlier efforts, including time management features introduced in 2018 and anti-cyberbullying tools.

While Meta's initiative represents a significant step, some experts remain skeptical. Zvika Krieger, a former Meta employee, commented, "All this is better than it was before... but I do think that it doesn't solve all the problems."

As social media platforms continue to evolve, balancing user engagement against safety remains a critical challenge. Meta's latest move shows how difficult it is to address safety concerns while keeping its platforms appealing to younger users.